User-centered design concept for ERP-integrated Virtual Assistant

🎯Project goal

The main objective of this project was to research user-assistant interaction dynamics within the Sage B7 ERP system.

I set out to answer the following research questions: How should the system frame the help it offers users? When is the right time to offer help? How long should help messages be?

🌐Approach

I designed a user-centered interaction concept for a virtual assistant. Later, I discussed it with participants from the project context (ERP users and consultants) in a participatory workshop.

This allowed me to analyze the challenges and opportunities of a virtual assistant feature for an ERP system. Finally, I proposed feature requirements and design recommendations for future design work in this area.

Project Stages

Theoretical background

1. Lessons learned from previous experiences with digital assistants

2. Best practices and design recommendations for timing

3. Best practices and design recommendations for length

4. Best practices and design recommendations for framing

5. Best practices and design recommendations for sense of control

Lessons learned from Clippy

To learn from earlier experiences with digital assistants and to understand why Clippy and Rover failed as “digital assistants”, I reviewed Luke Swartz’s (2003) thesis “Why People Hate the Paperclip: Labels, Appearance, Behavior, and Social Responses to User Interface Agents.”

From the many findings of this thesis, which Swartz (2003) established through theoretical, qualitative, and quantitative studies, I selected five main concepts that summarize the lessons learned from the digital assistants Clippy and Rover.

Lesson learned #1:

Cognitive labels and explicit system-provided labels can influence the perception and interaction of users with virtual assistants.

Lesson learned #2:

The way users learn about new features of the system and seek help is related to their level of expertise. It’s crucial to provide solutions that take different learning styles and levels of know-how into account.

Lesson learned #3:

The mental model users have about the virtual assistant influences how they interact with it and could generate expectations that sometimes do not correspond to reality.

Lesson learned #4:

Consistency is crucial in the design of an agent’s verbal and non-verbal traits, that is, its character and personality.

Lesson learned #5:

When providing proactive help, the assistant needs to act consistently with the user’s decisions: once the user declines the offered help, the assistant should respect this decision and not be insistent or intrusive.

Best practices for timing

Timing is a decisive factor when providing help within an enterprise system. To compile a list of design recommendations based on previous research, I analyzed the concepts of etiquette and timing, proactive assistance, and task support as discussed by different authors.

Computers as social actors: etiquette and timing

Design recommendations:

1. The characteristics that, according to Nass and Steuer (1993), reinforce the perception of social interaction (language use, interactivity, playing a social role, and human-sounding speech) should be considered when determining the right timing for the help provided by virtual assistants.

2. To find the right timing for user assistance, designers should ask: “What would one want in a human assistant?” According to the Computers Are Social Actors (CASA) theory, interactions between humans and computers are governed by the same psychological rules that apply to interactions between people, so this question can bring useful insights to the design process (Swartz, 2003).

Proactive assistance and context-awareness

Design recommendations:

1. Based on the key characteristics of an advanced user assistance system defined by Maedche, A., et al. (2016), the following should be considered when designing proactive help, so that its timing matches users’ needs:

– The virtual assistant should allow users to decide whether or not to follow the provided assistance.

– The virtual assistant should have self-learning capabilities and be context-aware.

– The virtual assistant should inform users about the effects of any offered help or option, and of any alternative actions.

2. Following the recommendations of Xiao, J. et al. (2003), the system should suggest help only when its confidence in offering accurate assistance is very high. To this end, the techniques for automatically analyzing user goals and intentions should be carefully developed and improved over time.
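To make this confidence-based gating concrete, here is a minimal TypeScript sketch. It assumes a hypothetical intent-recognition component that returns candidate help topics with a confidence score between 0 and 1; neither the `HelpCandidate` interface nor the threshold of 0.9 comes from Sage B7 or from Xiao et al. (2003), and a real threshold would have to be tuned empirically.

```typescript
// Hypothetical output of an intent-recognition component (not a Sage B7 API).
interface HelpCandidate {
  topic: string;
  confidence: number; // estimated probability that the help is relevant, 0..1
}

// Illustrative value only; a real threshold would need empirical tuning.
const CONFIDENCE_THRESHOLD = 0.9;

// Keep only the candidates the assistant is confident about, most confident
// first, so that uncertain guesses never interrupt the user.
function selectProactiveHelp(
  candidates: HelpCandidate[],
  threshold: number = CONFIDENCE_THRESHOLD,
): HelpCandidate[] {
  return candidates
    .filter((c) => c.confidence >= threshold)
    .sort((a, b) => b.confidence - a.confidence);
}

// Usage with made-up scores for two of the workshop scenarios.
const suggestions = selectProactiveHelp([
  { topic: "export to excel", confidence: 0.95 },
  { topic: "exception masks", confidence: 0.6 },
]);
console.log(suggestions.map((s) => s.topic)); // [ 'export to excel' ]
```

In this sketch, raising the threshold means fewer interruptions but more situations in which no help is offered at all; the recommendation above deliberately favors fewer, more accurate suggestions.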

Task support

Design recommendations:

1. Support for task identification, task completion, task recommendation, scheduling, and multi-step task accomplishment should be considered when designing a virtual assistant to be embedded in an enterprise system, since task management supported by an intelligent assistant could improve the user experience (Trippas, J. et al., 2019).

Best practices for length

To examine best practices regarding the length of help messages in enterprise systems, I reviewed the literature on dynamic and user-settable help levels and derived one main design recommendation from previous research.

Dynamic and user settable levels

Design recommendations:

1. An interface composed of multilayer slide bars would allow users to customize the help content. Additionally, the authors suggest a rating system that lets users evaluate the help content, assess the accuracy of the provided assistance, and indicate possible errors and their causes (Açar, M., & Tekinerdogan, B., 2020).
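A minimal TypeScript sketch of user-settable help levels, assuming that each help message is written at several levels of detail and that a single slider (0 to 100) selects among them. The `LayeredHelp` and `HelpRating` types, the placeholder texts, and the simple averaging of ratings are illustrative assumptions, not the design proposed by Açar and Tekinerdogan (2020).

```typescript
// Hypothetical help entry written at increasing levels of detail.
interface LayeredHelp {
  id: string;
  levels: string[]; // index 0 = shortest, last index = most detailed
}

// Map a slider position (0..100) onto one of the available detail levels.
function textForSlider(help: LayeredHelp, sliderValue: number): string {
  if (help.levels.length === 0) throw new Error("help entry has no text");
  const clamped = Math.min(100, Math.max(0, sliderValue));
  const index = Math.round((clamped / 100) * (help.levels.length - 1));
  return help.levels[index];
}

// Hypothetical rating record: users score the help and can describe an error.
interface HelpRating {
  helpId: string;
  stars: 1 | 2 | 3 | 4 | 5;
  reportedError?: string;
}

const ratings: HelpRating[] = [];

function rateHelp(rating: HelpRating): void {
  ratings.push(rating);
}

// Average score for one help entry, or undefined if nobody has rated it yet.
function averageStars(helpId: string): number | undefined {
  const relevant = ratings.filter((r) => r.helpId === helpId);
  if (relevant.length === 0) return undefined;
  return relevant.reduce((sum, r) => sum + r.stars, 0) / relevant.length;
}

// Usage with placeholder texts.
const exportHelp: LayeredHelp = {
  id: "export-to-excel",
  levels: ["Short hint.", "Hint with one example.", "Step-by-step explanation."],
};
console.log(textForSlider(exportHelp, 0)); // Short hint.
console.log(textForSlider(exportHelp, 60)); // Hint with one example.
rateHelp({ helpId: "export-to-excel", stars: 4 });
rateHelp({ helpId: "export-to-excel", stars: 2, reportedError: "Outdated menu name" });
console.log(averageStars("export-to-excel")); // 3
```

Keeping the reported error next to the score reflects the authors’ suggestion that ratings should also help identify inaccurate assistance and its causes, not only measure satisfaction.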

Best practices for framing

In the article “The framing of decisions and the psychology of choice”, Tversky and Kahneman (1981) introduced the concept of framing. They showed that the way a situation is framed or formulated produces “shifts of preference” in how people perceive decision-making factors such as problems, probabilities, and outcomes.

Framing effect

Design recommendations:

1. Since the way a decision is presented to users can influence their choices, designers should consider the labeling and wording associated with these choices (Cockburn, A. et al., 2020). Especially when introducing virtual assistants in enterprise systems, it would be important to present the assistance with a positive framing.

2. Risks related to interface choices should be considered when introducing new features. Reducing perceived risk by making clear the benefits and possible side effects of a new feature, in this case a virtual assistant, could increase feature adoption and improve the user experience.

3. Designers should also consider whether and when it is appropriate to use framing at all, reflecting on manipulation and neutrality in each specific context. It would be advisable for designers to focus on “assisting users in making good decisions regarding the features they enable” (Cockburn, A. et al., 2020).

Best practices for sense of control

In the article “Direct manipulation vs. interface agents”, Shneiderman and Maes (1997) discuss the sense of accomplishment and control that lets users feel they have mastery over computers. In this debate, Shneiderman argues that interface agents can reduce users’ sense of control, especially when they are presented as anthropomorphic characters (Shneiderman & Maes, 1997).

Design recommendations:

1. According to Shneiderman (as cited in Swartz, 2003), interfaces that encourage an “internal locus of control” allow users to feel a sense of mastery over the system. Therefore, options to customize and configure the system’s assistance should be considered when designing a virtual assistant, in order to increase users’ sense of control.

2. Agents increase users’ feelings of control and self-reliance when they teach them new skills (Swartz, 2003). Consequently, designers should consider the virtual assistant’s role as a facilitator for learning new skills, according to users’ needs and interests.

Methodology

Design framework

For this project, I used the Design Case Study framework developed at the University of Siegen by Wulf et al. (2015), which encompasses three main phases.

I focused my design process on the first two phases of the framework, namely Pre-Study and Design.

PRE-STUDY

Ethnographic methods are used to gain an initial understanding of existing technological and organizational practices and social perspectives, in order to identify problems, needs, and opportunities.

DESIGN

Technological options are applied to a context-specific problem, need, or opportunity through participatory approaches such as participatory design, usability tests, and design iterations.

APPROPRIATION STUDY

The purpose of this phase is to capture the long-term effects of IT artifacts in specific contexts through reflection with practitioners, repeated study, and joint work with pre-study efforts.

Context

I developed this project during the first semester of my Master’s in Human-Computer Interaction at Universität Siegen, at the Chair of CSCW and Social Media, working specifically within the chair’s ExpertERP project.

The ExpertERP project is funded by the German Federal Ministry of Education and Research (BMBF) and addresses a current problem in the Siegerland region. According to the ExpertERP initial position statement (2022):

“Companies have always faced the challenge of providing their employees with the skills and expertise they need to carry out their work. […] Enterprise resource planning systems (ERP) represent the company’s data foundation and are therefore at the center of operational digitization efforts. In practice, however, there is often a decrease in the functional depth and breadth of these systems with drastic consequences for process efficiency and further digitization efforts.”

To approach this problem, ExpertERP brings together a consortium of actors to “research in practice how users acquire ERP systems, i.e. make them usable for their own work practice” (ExpertERP, 2022).

Research methods

To research user-assistant interaction dynamics within the Sage B7 ERP system, I employed two research approaches.

WORKSHOPS

Two different sessions

Software: Miro + Google Meet

Data collection: workshop activities and recordings of open discussions

RESEARCH THROUGH DESIGN

Based on the feedback from the users in the first workshop, I created a design concept to facilitate discussions and reflections among the participants in the second workshop.

→ The project objective and research questions served as guidelines for the design and development of the workshops.

WORKSHOP 01

With the initial workshop, in the Pre-Study phase, I aimed to gain an understanding of the specific context in order to inform the design of the interaction concept.

Participants: 6 (users and consultants).

Goal: to get to know the users’ thoughts, beliefs, and ideas about virtual assistants for the ERP System.

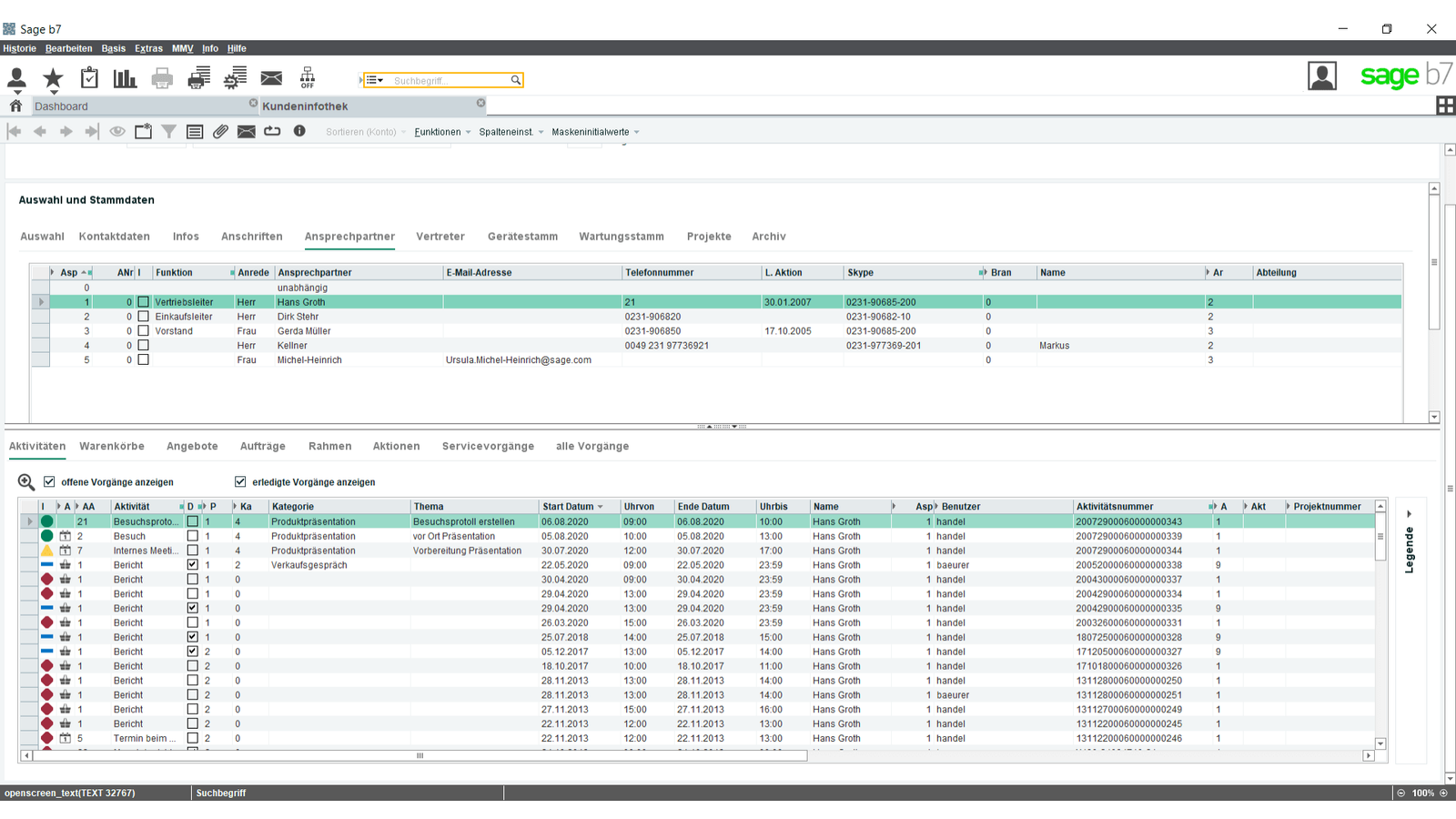

I organized the workshop in two stages. In the first stage, I gave an introductory presentation about the concept of the virtual assistant, its two primary functions (offering proactive help and tips), and the options to customize the assistant. I complemented this with four examples based on scenarios familiar to users from their current use of the ERP system at work: the use of “exception masks”, the “export to excel” option, “scrolling through extensive tables”, and their current perceptions of the well-known “pop-ups”.

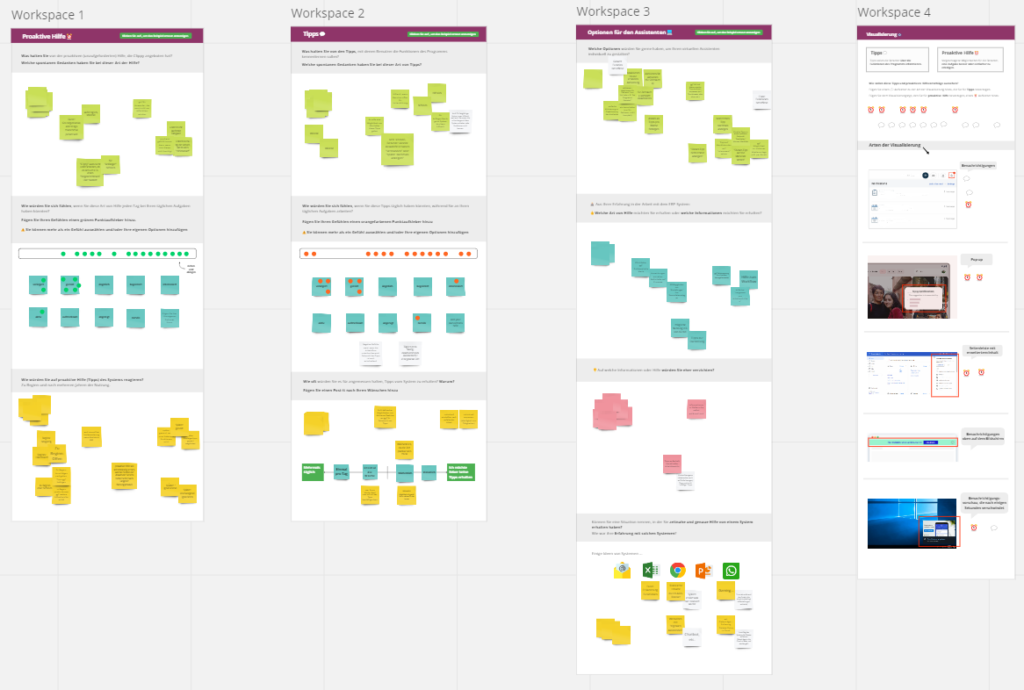

In the second stage, the participants took part in a series of activities organized in four workspaces, guided by a German moderator. The activities included open-ended questions, voting, and timeline diagrams.

The first workspace focused on proactive help, the second workspace dealt with tips from the system, the third workspace was about options to customize the assistant, while the fourth workspace focused on the visualization of help by the system.

WORKSHOP 02

Later, in the Design phase, after creating the design concept based on the findings of the first workshop, I designed the second workshop.

Participants: 7 (users and consultants).

Goal: to gather feedback and critique, and to foster a collective reflection on the proposed interaction concept for a virtual assistant.

This workshop was organized in four stages. The first stage was an introductory presentation about the concept of the virtual assistant and its main roles, proactive help and tips, following Swartz (2003). This short presentation was intended to remind participants of the previous workshop about the main topic and to introduce new participants to it.

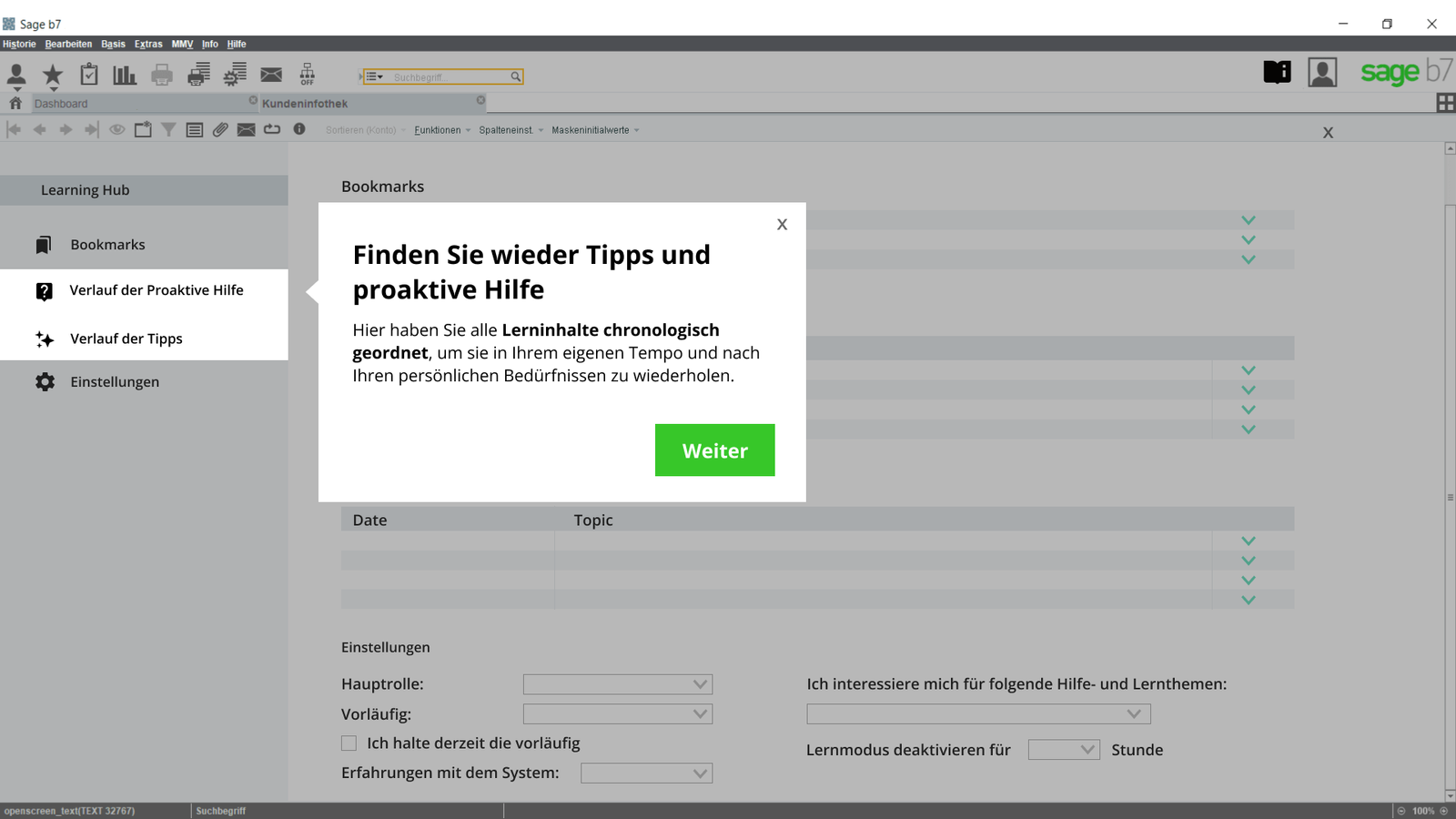

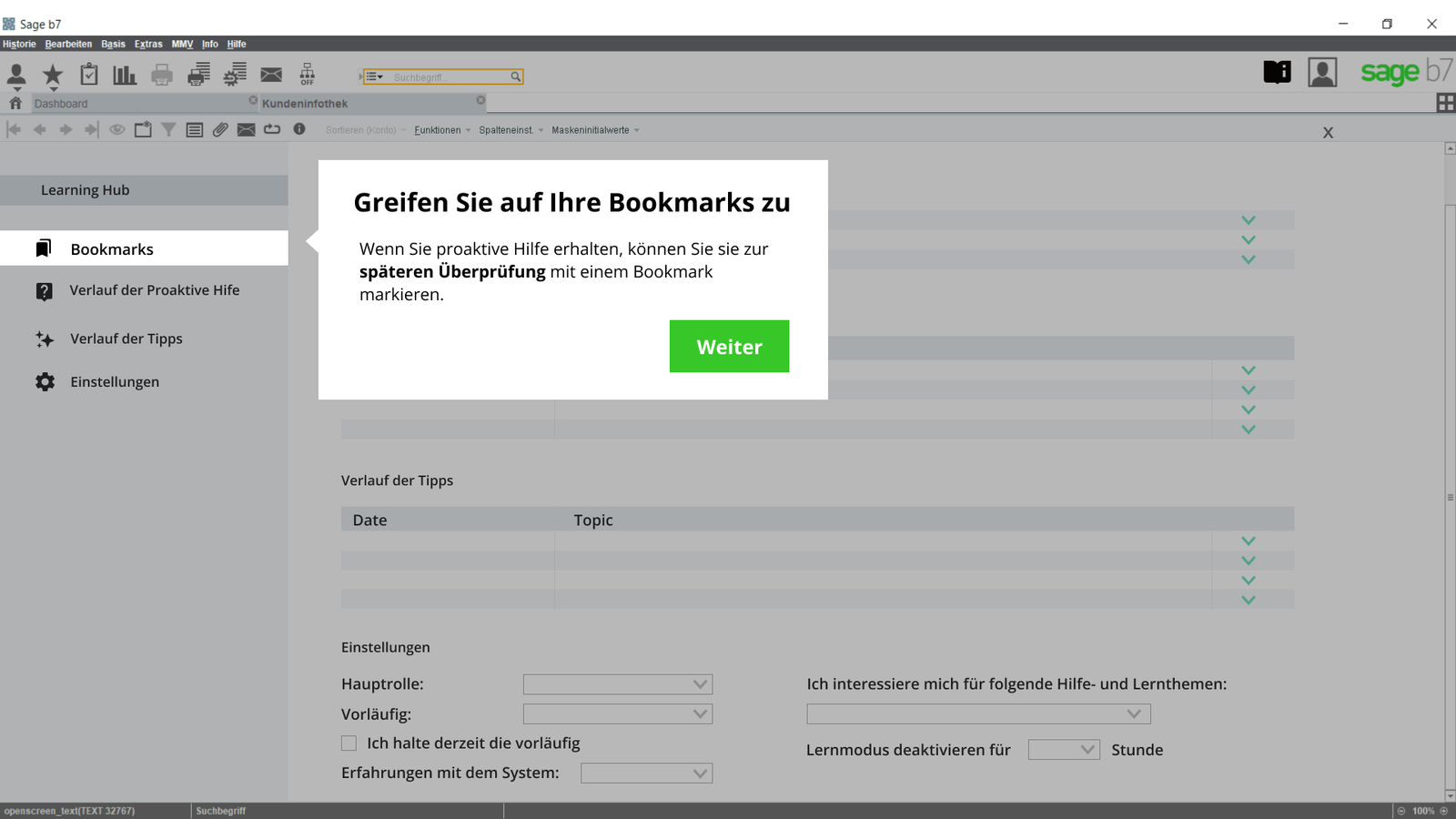

In the second stage, the three flows of the interaction concept were introduced.

First flow: feature announcement and tour

Second flow: proactive help

Third flow: tips

I illustrated these flows with screenshots of the design concept and explained the empirical findings behind them.

In the third stage, the participants received three links to the interaction concept prototype on the online platform Figma and were given time to explore and interact with the three flows. The intention was to let them experience the concept before asking them for feedback.

Finally, in the fourth stage, I organized a series of participatory activities in three workspaces. The moderator explained each activity, provided time for its completion, and fostered a discussion after each completed task.

Research findings

DATA ANALYSIS

Following the completion of each workshop, I proceeded to analyze the gathered data. Specifically, the responses to the workshop activities were extracted from the Miro board for both sessions and compiled into a document. Additionally, I obtained audio transcripts of both participatory working sessions, and performed a thematic analysis of the entire dataset using the methodology developed by Braun and Clarke (2006), with the assistance of the software MAXQDA. During this process, I identified codes and patterns, and developed themes that served as the foundation for the subsequent design and evaluation of the interaction concept.

THEMES

During the data analysis, I started by grouping together the codes that shared some common consolidating features, following the approach described by Braun and Clarke (2006). Subsequently, I used all the clustered codes to construct themes.

THEMES WORKSHOP 01

Help visualization

Outlines the ways participants perceive the placement and presentation of the help by the system.

Learning time

Focuses on learning times, which vary according to each user’s role and routines.

Positive perceptions toward virtual assistants

Presents the positive perceptions from the participants toward virtual assistants in ERP Systems.

A sense of control over the system

Focuses on the importance of the sense of control over the help system.

Reactance towards repetitive assistance

Outlines the importance of timely and accurate help that avoids repetition, since users are reluctant to receive information they already know.

Expertise and learning

Centers on how users’ level of experience shapes the assistance the system should offer.

THEMES WORKSHOP 02

Best timing to learn with the virtual assistant

Outlines the perceptions of the best timing to receive learning content and new feature announcements.

Expertise-sharing dynamics

Focuses on expertise-sharing dynamics mediated by the virtual assistant embedded in the ERP System.

Pop-ups or notifications?

Centers on the visualization options of the help content provided by the system.

Concerns about the Learning Hub

Maps the participants’ feedback on the concept of “Learning Hub” in terms of new possibilities and weaknesses.

Settings preferences

Outlines the preference settings that users mentioned for customizing the assistant.

Users’ attention

Maps strategies to draw and keep users’ attention.

Framing and writing style

Presents participants’ feedback on the framing of the virtual assistant’s help messages.

REQUIREMENTS ELICITATION

After analyzing the data from Workshop 01, I moved on to the requirements elicitation stage. In this stage, I followed the blended adaptation of Kuechler and Vaishnavi’s approach to requirements elicitation within the stages of the Design Case Study framework, as proposed by Syed, H. A., et al. (2022).

I started with the conceptualization phase, establishing crude requirements, which I understood as the main design challenges reflected in the themes derived from the empirical study. In the derivation phase, I went a step further and translated the crude requirements into design principles, that is, strategies to address the initial requirements. Finally, in the substantiation phase, I established the design feature requirements.

Using the feature requirements as a guide, I proceeded to the next phase of creating the design concept.

Crude requirements

01

The display of help information should positively influence the user’s willingness and attitude to learn.

02

The system should promote a continuous learning process while being flexible enough to adapt to users’ varying roles and workloads.

03

The system should preserve the user’s initial willingness to interact with the help and learn new information.

04

Customization options can enhance the user experience by giving users a sense of control, which is essential.

05

The system should collaborate with users and not interrupt their workflow.

06

User preferences and expertise should be the foundation to offer timely and accurate help.

Design concept

DESIGN PRINCIPLES

Following the definition of crude requirements, I worked on the design principles and feature requirements.

All in all, starting from the initial 6 crude requirements, the requirements elicitation resulted in 12 design principles and a set of feature requirements.

Design principles

01

The placement and presentation of the help by the system should allow the users to notice the helping information while working and foster their conscious interaction with it.

02

Learning and help information should remain available after it is first displayed, and users should be able to recognize where to find it.

03

The system should be aware of the workload of the user to deliver relevant help and learning information.

04

The system should be adjustable to the user preferences according to their performance and training time.

05

The system should foster the co-construction of knowledge between teams and users with different levels of expertise.

06

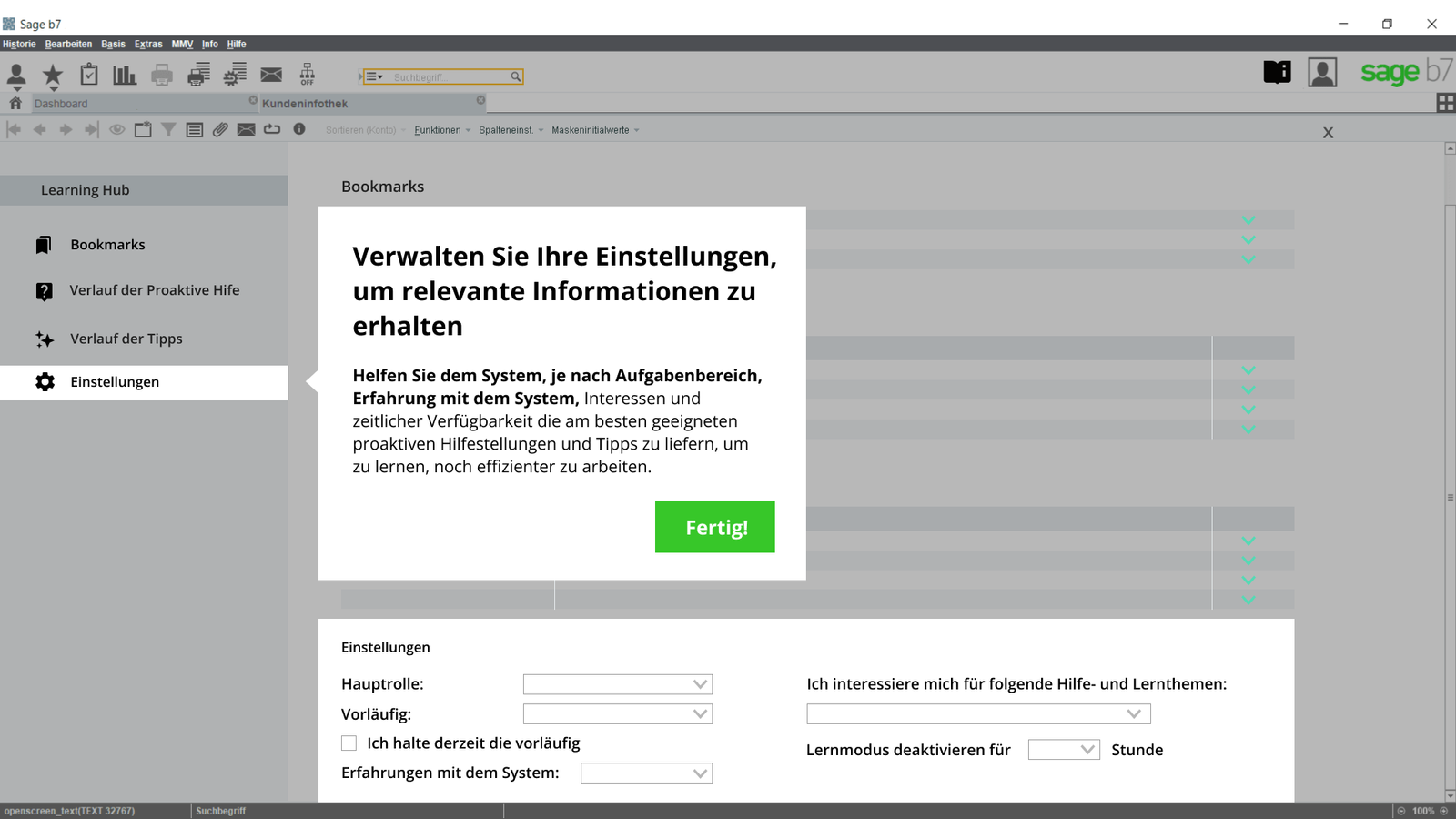

Users should be onboarded and introduced to the learning offerings provided by the system.

07

Users should be introduced to the different preference settings that they can customize according to their needs.

08

The users should be able to set the periods of time when they are willing to learn.

09

The users should have different options to reply to help and learning information.

10

The system should respect and follow the user’s preferences regarding the salience and frequency of the help information in order to avoid irritating and interrupting them.

11

The system should recognize the users’ data and interests and provide help accordingly.

12

The system should ask for the interests, preferences, and expertise of the users during onboarding to avoid information overload and tips outside of the current domain.

FEATURES

Based on the 12 Design Principles presented above, I worked on a series of features for the design concept of the virtual assistant for the Sage B7 ERP System.

Feature 01

Proactive help is displayed as a pop-up in the center of the screen. If the user closes it, it remains on the screen as a floating dialog bubble in the bottom-right corner until it is confirmed or discarded a second time.
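The pop-up lifecycle of Feature 01 can be read as a small state machine. The TypeScript sketch below is one possible encoding; the state and event names are hypothetical and were not part of the Figma prototype.

```typescript
// States of a proactive-help message (hypothetical names).
type HelpState = "centerPopup" | "floatingBubble" | "confirmed" | "dismissed";
// User actions on the message.
type HelpEvent = "confirm" | "dismiss";

// The first dismissal only minimizes the centered pop-up into a floating
// bubble in the bottom-right corner; a second dismissal removes it.
function nextState(state: HelpState, event: HelpEvent): HelpState {
  switch (state) {
    case "centerPopup":
      return event === "confirm" ? "confirmed" : "floatingBubble";
    case "floatingBubble":
      return event === "confirm" ? "confirmed" : "dismissed";
    default:
      // "confirmed" and "dismissed" are final: the message has left the screen.
      return state;
  }
}

// Usage: the user closes the pop-up, then discards the bubble.
let current: HelpState = "centerPopup";
current = nextState(current, "dismiss"); // "floatingBubble"
current = nextState(current, "dismiss"); // "dismissed"
console.log(current); // dismissed
```

Because the final states ignore further events, a discarded message cannot reappear through this path, which is in line with Lesson learned #5 about not being insistent once help has been declined.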

Feature 02

Help by the system is framed to positively influence the attitude and willingness of the users to learn.

Feature 03

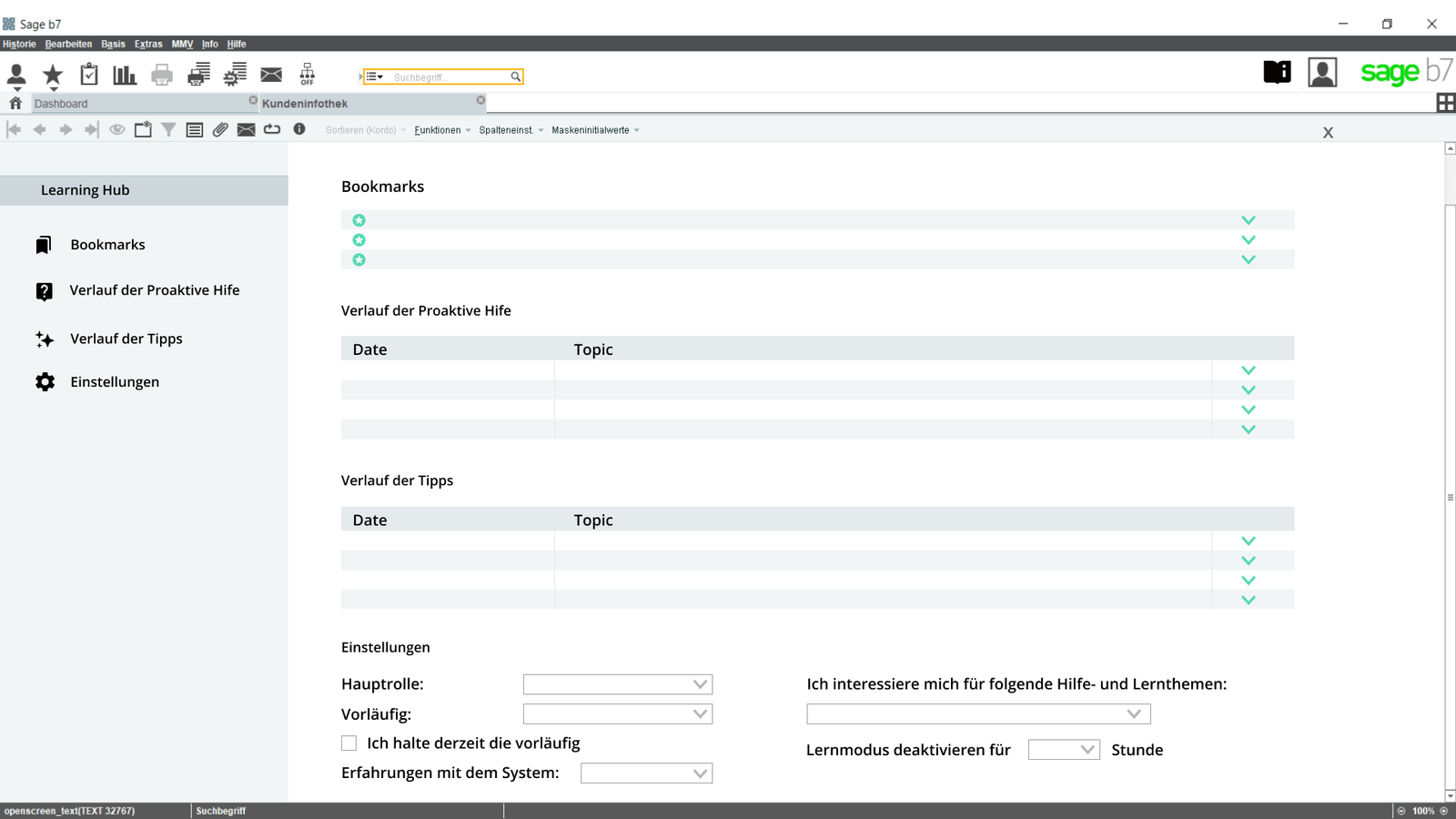

Tips and proactive help can be found on a dedicated screen called “Learning Hub” which can be accessed through the notifications icon on the top-right corner of the navigation bar.

Feature 04

In the Learning Hub, users find new tips and proactive-help information, the history of received tips and proactive help, and the preference settings.

Feature 05

In the preference settings, users can specify their primary role, time preferences, and learning interests. Users can also switch between roles when necessary.

Feature 06

Based on the user’s settings and usage analytics, the system recognizes whether the user is in performance or training mode and delivers tips and proactive help accordingly.
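The concept does not specify how the system tells performance mode from training mode. The TypeScript sketch below shows one simple possibility, assuming the learning time windows from the preference settings (Feature 05) and a hypothetical activity metric (actions per minute) from usage analytics; the metric, the threshold of 20, and the example role are illustrative assumptions. It also assumes, for illustration only, that tips wait for training mode while proactive help is delivered in both modes.

```typescript
// A user-defined period in which the user is willing to learn.
interface LearningWindow {
  day: number; // 0 = Sunday ... 6 = Saturday, as returned by Date.getDay()
  startHour: number; // inclusive, 0..23
  endHour: number; // exclusive, 1..24
}

interface UserPreferences {
  role: string;
  learningWindows: LearningWindow[];
}

// Hypothetical analytics signal from the ERP client.
interface ActivitySnapshot {
  actionsPerMinute: number;
}

type Mode = "performance" | "training";

// Training mode only inside a learning window and while activity is low;
// otherwise the user is assumed to be busy with productive work.
function detectMode(
  prefs: UserPreferences,
  activity: ActivitySnapshot,
  now: Date,
  busyThreshold = 20,
): Mode {
  const hour = now.getHours();
  const inWindow = prefs.learningWindows.some(
    (w) => w.day === now.getDay() && hour >= w.startHour && hour < w.endHour,
  );
  return inWindow && activity.actionsPerMinute < busyThreshold ? "training" : "performance";
}

// Assumed delivery rule: tips wait for training mode, proactive help does not.
function shouldDeliver(kind: "tip" | "proactiveHelp", mode: Mode): boolean {
  return kind === "proactiveHelp" || mode === "training";
}

// Usage: a user who prefers to learn on Mondays between 14:00 and 16:00.
const prefs: UserPreferences = {
  role: "purchasing",
  learningWindows: [{ day: 1, startHour: 14, endHour: 16 }],
};
const mondayAfternoon = new Date(2024, 0, 1, 15, 0); // 1 January 2024 was a Monday
console.log(detectMode(prefs, { actionsPerMinute: 5 }, mondayAfternoon)); // training
console.log(detectMode(prefs, { actionsPerMinute: 45 }, mondayAfternoon)); // performance
```

Combining an explicit user setting with an observed signal keeps the user in charge of when learning happens (design principle 08) while still letting the system back off during busy periods.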

Feature 07

An onboarding process will introduce the users to the Learning Hub and the types of help offered by the system.

Feature 08

Users can respond to pop-ups in three ways: show again later, don’t show again, or bookmark.
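A minimal TypeScript sketch of how the three replies could change what the assistant shows next. The in-memory `ReplyStore` class and the one-day snooze for “show again later” are hypothetical: the concept does not define how long a postponed message waits, and a real implementation would persist replies per user. In this sketch, bookmarked items are kept for the Learning Hub and do not affect whether the message is shown again.

```typescript
type Reply = "showLater" | "dontShowAgain" | "bookmark";

interface HelpItem {
  id: string;
  text: string;
}

const ONE_DAY_MS = 24 * 60 * 60 * 1000;

// Hypothetical in-memory record of a user's replies to help pop-ups.
class ReplyStore {
  private readonly suppressed = new Set<string>();
  private readonly bookmarks = new Map<string, HelpItem>();
  private readonly snoozedUntil = new Map<string, number>();
  private readonly snoozeMs: number;

  constructor(snoozeMs: number = ONE_DAY_MS) {
    this.snoozeMs = snoozeMs;
  }

  // Record a reply; `now` is a timestamp in milliseconds.
  handle(item: HelpItem, reply: Reply, now: number): void {
    switch (reply) {
      case "dontShowAgain":
        this.suppressed.add(item.id); // respect the decision permanently
        break;
      case "bookmark":
        this.bookmarks.set(item.id, item); // keep it for the Learning Hub
        break;
      case "showLater":
        this.snoozedUntil.set(item.id, now + this.snoozeMs);
        break;
    }
  }

  // A message may reappear unless it was suppressed or is still snoozed.
  canShow(itemId: string, now: number): boolean {
    if (this.suppressed.has(itemId)) return false;
    const until = this.snoozedUntil.get(itemId);
    return until === undefined || now >= until;
  }

  bookmarked(): HelpItem[] {
    return Array.from(this.bookmarks.values());
  }
}

// Usage with a one-second snooze to keep the numbers small.
const store = new ReplyStore(1000);
const tip: HelpItem = { id: "tip-export", text: "You can export this table." };
store.handle(tip, "showLater", 0);
console.log(store.canShow(tip.id, 500)); // false
console.log(store.canShow(tip.id, 1000)); // true
```

Making “don’t show again” permanent in this sketch mirrors Lesson learned #5: once the user declines help, the assistant should not insist.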

Discussion

LEARNING AND EXPERTISE

Regarding learning and expertise, Swartz (2003) found that the way users learn about new features and seek help is related to their level of experience with the system, which was also observed in the participatory Workshop 01. Therefore, the Learning Hub offers a series of settings that users can customize, so that the system can provide accurate help according to their level of expertise with the system and their interests.

MENTAL MODELS

Swartz (2003) also stated that the mental model users have of the virtual assistant influences how they interact with it and can generate expectations that do not always correspond to reality. Therefore, a feature announcement tour was designed to clearly introduce the new learning and help features to users.

USER PERCEPTIONS

Additionally, Swartz’s (2003) study of the virtual assistants Clippy and Rover showed that virtual assistants are generally perceived as irritating. Workshops 01 and 02 confirmed that users of ERP systems consider virtual assistants and messaging a cause of workflow interruption. Therefore, alternative ways for the system to communicate help should be considered.

FRAMING EFFECT

Cockburn, A. et al. (2020) analyzed how risk and framing influence users’ choice to enable or disable features in a system, specifically when positive or negative framing is highlighted, and when users are certain or uncertain about future tasks. They found that positive framing had higher acceptance than negative framing, and that users were more risk-prone when confronted with negative framing. The preference for positive framing was also reflected in the voting activity in Workshop 02, where positively and negatively framed versions of a learning message were tested with participants. Participants stated that the negative framing “sound[ed] like bad advertising, too cumbersome after advertising text, and not clear enough what it’s about” and that “only positive [style] gives the employee a good feeling when tips are displayed.”

FEELING OF CONTROL

In the study of Microsoft’s Clippy, Swartz (2003) illustrated the concept of feeling of control and pointed out that the tips function, which aimed to teach users new skills and let them use those skills at their own pace, is more likely to increase users’ feeling of control. Similarly, in Workshop 01, carried out to inform the design of the interaction concept, participants suggested several ways to reply to help messages, noting that they would like to learn in their own time and at their own pace. This supports Swartz’s (2003) observations and informed the three options for replying to a pop-up with proactive help content in the prototype presented to users: “bookmark the piece of content”, “don’t show again”, and “show again later”.

NUDGING

Finally, Thaler and Sunstein (as cited in Caraban et al., 2019) presented the concept of nudging as a way to support better decision-making by taking into account the systematic cognitive biases and heuristics that are often involved in thinking processes. Building on this, Caraban et al. (2019) presented a classification of nudging mechanisms to be implemented in technical systems. These nudging mechanisms should be examined in further studies to evaluate and improve the interaction between virtual assistants and users of ERP systems.

Lessons learned

I gained a wealth of knowledge during the development of this project, including valuable lessons on the design process, scientific reading and literature reviewing, qualitative data analysis, workshop design, and scientific report writing. In addition to learning these skills, I was fortunate to work with supportive project supervisors who provided me with guidance and mentorship as I worked towards my academic goals.

The biggest obstacle I faced during the project was the language barrier. This challenge has been a driving force behind my ongoing efforts to improve my German language skills, both through formal study and informal practice.